Gergő Nagy


The AWS MCP Servers Have Arrived – Overview

AWS has introduced MCP servers that let you interact with AWS services using natural language. Read our first impressions here.

Introduction: How do we get from monolithic AI systems to MCP?

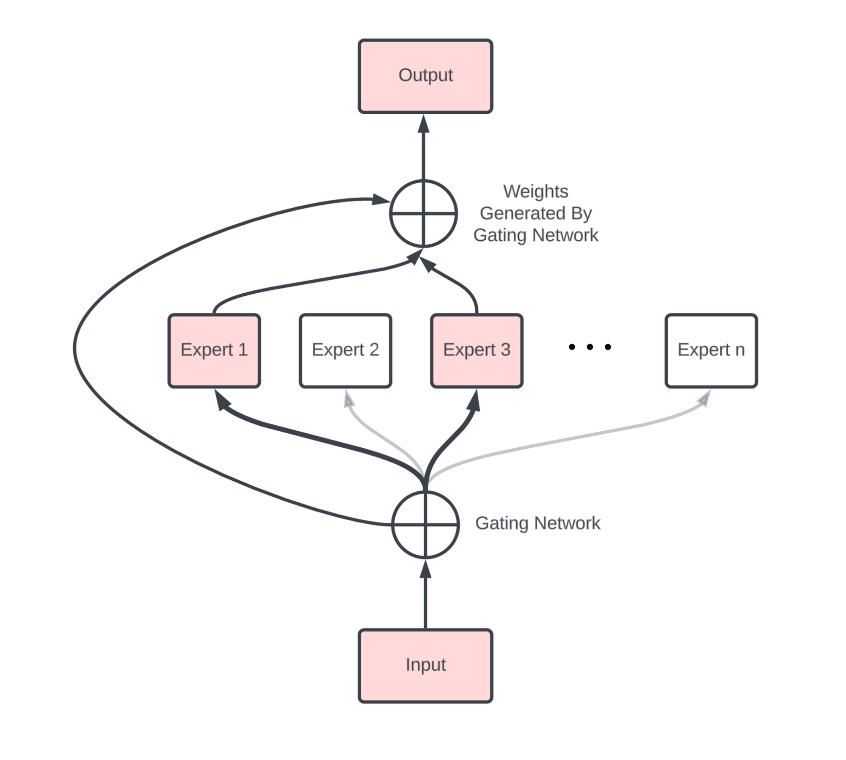

The main disadvantage of monolithic architectures is the tight coupling between processes, which makes components difficult to replace or update. Microservices were created in response to this challenge. The pattern maps quite accurately onto the AI world as well: for example, a Mixture of Experts (MoE) feed-forward network (FFN) can be an alternative to a dense FFN. Its router plays a role similar to a load balancer, distributing work across smaller, more specialized models and thereby loosening dependencies.

Agentic AI also fits this pattern, but here it is an augmentation rather than an alternative. The Agent is a local extension of the model, although it can also run on a remote resource. When the Agent asks, the model answers. The two behave differently: the Agent is proactive and can operate in loops, perceive its environment, evaluate the next steps, and execute them. Generative AI, in contrast, is reactive: it answers a question or generates an image or video. There are several types of Agentic AI; the most advanced today is the Learning Agent.

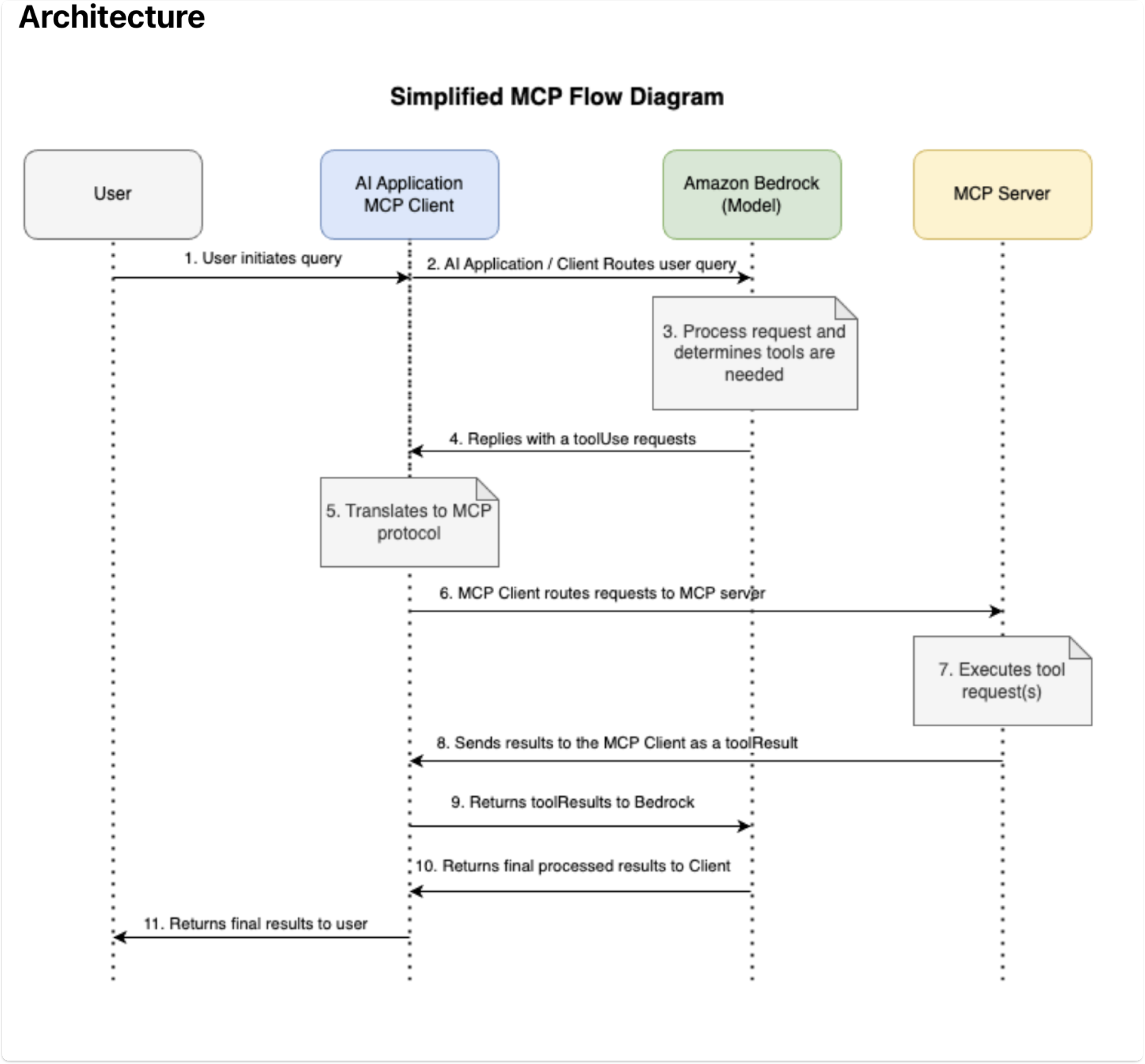

Such an agent includes multiple modules, each responsible for different tasks. With this modular system, it can keep track of the state of the environment, the consequences of past actions, and can estimate solutions to upcoming tasks while trying to predict their impact. The agent, however, is only an extension of the model; the model itself is stateless, so with each new exchange the entire context must be sent to the model. Based on this context, the model responds, the agent receives the response, and the next loop begins.
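The loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `run_agent` and `toy_model` are hypothetical names, and the "model" is a plain function standing in for a real LLM call. The point it shows is that the model keeps no state, so the agent resends the whole history on every turn.

```python
from typing import Callable

Message = dict[str, str]


def run_agent(
    model: Callable[[list[Message]], str],
    task: str,
    max_steps: int = 5,
) -> list[Message]:
    """Drive a stateless model in a loop, resending the full history each turn."""
    history: list[Message] = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        # The model only knows what is in the context it receives right now.
        reply = model(list(history))
        history.append({"role": "assistant", "content": reply})
        if reply.startswith("DONE"):
            break
        # A real agent would act on its environment here; we fake the observation.
        observation = f"observed result of: {reply}"
        history.append({"role": "user", "content": observation})
    return history


def toy_model(context: list[Message]) -> str:
    """Hypothetical stand-in for an LLM: decides purely from the given context."""
    turns = sum(1 for message in context if message["role"] == "assistant")
    return "DONE" if turns >= 2 else f"step {turns + 1}"
```

Calling `run_agent(toy_model, "deploy a sandbox cluster")` returns the full transcript. Because the context grows with every loop, each later call is more expensive than the previous one.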

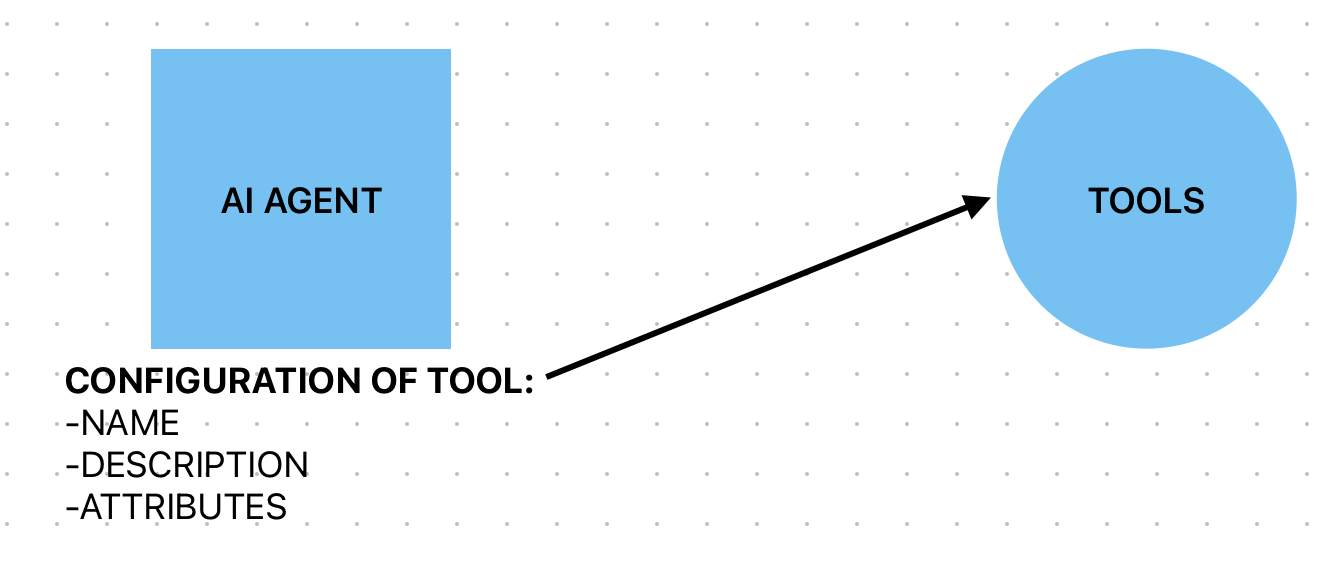

If only two actors participate in this process, the balance still tips heavily toward the model side, because the model must know what tools are needed for the tasks assigned to the agent. This knowledge must be present in the model as natural language text introduced during training, which is a long and extremely expensive process.

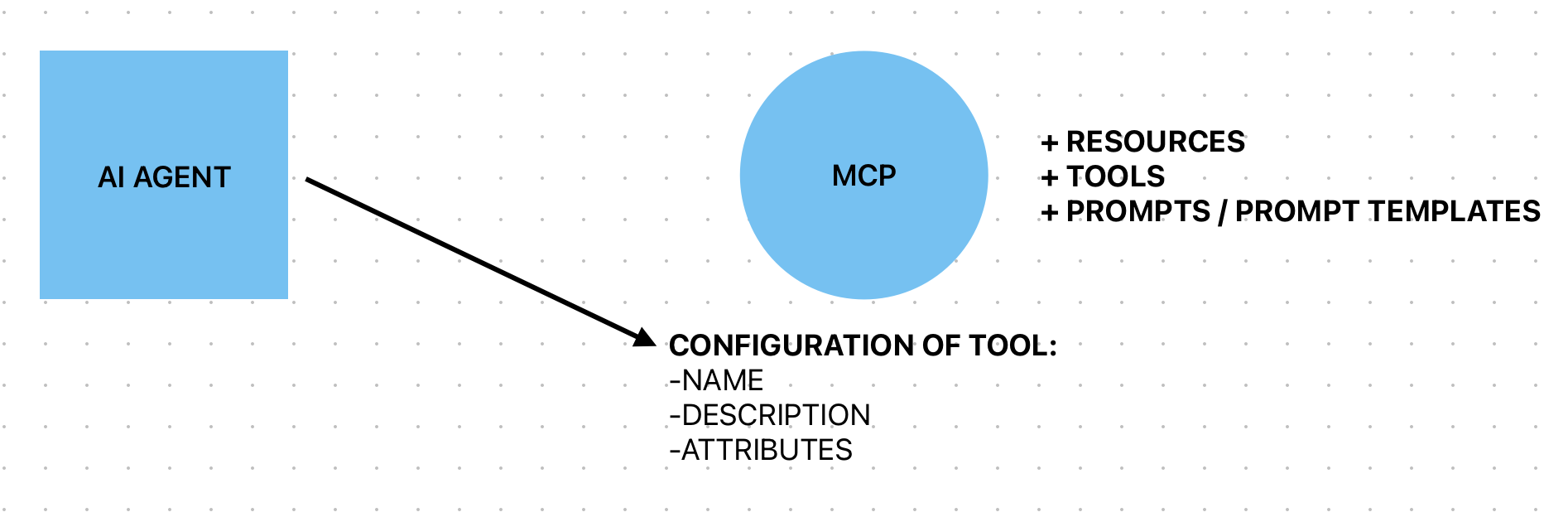

This is problematic, because in such a setup we cannot adapt quickly enough to the evolution of tools. Tools here mean any external software that we use via GUI, CLI, or API. MCP aims to solve this: it is often described as a virtual USB connector. Its purpose is to deliver, via context, knowledge on how to use tools, whether usage instructions or background information.

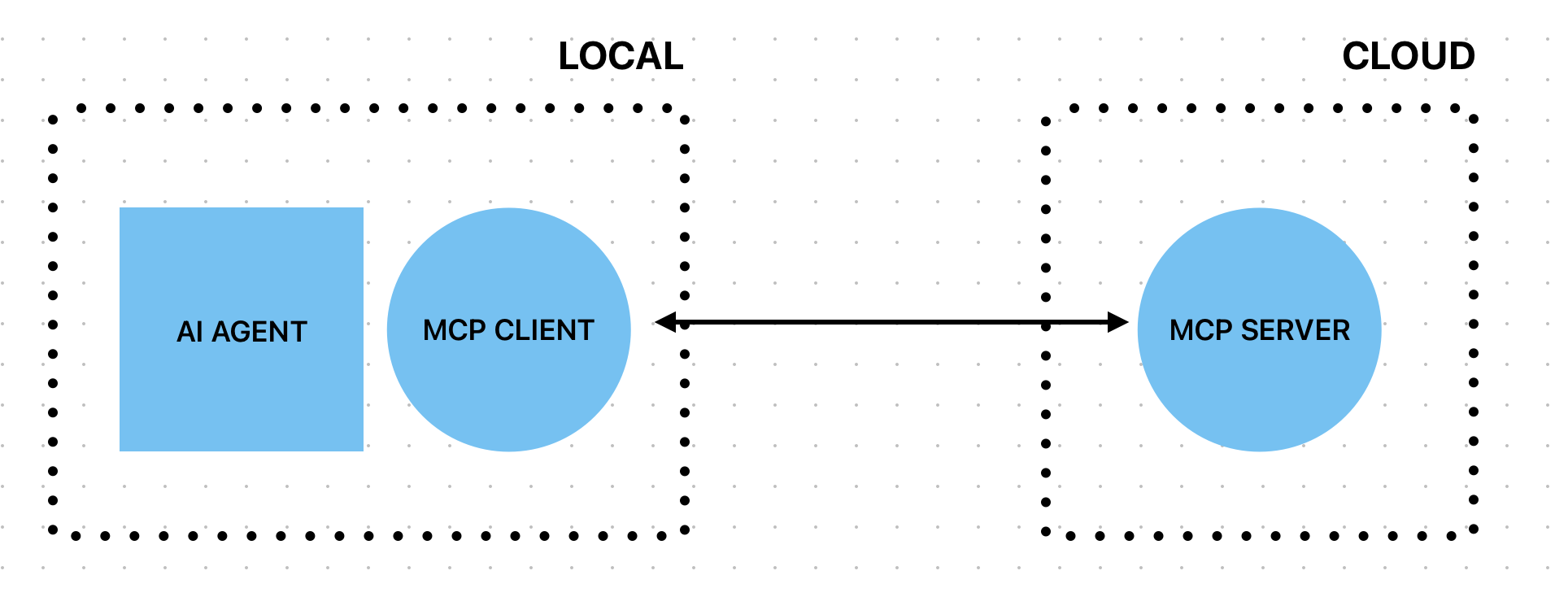

MCP itself can be decomposed into server and client sides: the server can run locally in a container or on a remote resource, and the client can run alongside the agent. This allows easy configuration for different clients.
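To make the client and server split more concrete, here is a minimal sketch of the messages involved. MCP is built on JSON-RPC 2.0, and `tools/list` and `tools/call` are method names from the protocol. Everything else is an assumption made for illustration: the `describe_cluster` tool, its schema, and the `tools_to_context` helper are hypothetical and do not come from any AWS server.

```python
def list_tools_request(request_id: int) -> dict:
    """JSON-RPC request a client sends to discover a server's tools."""
    return {"jsonrpc": "2.0", "id": request_id, "method": "tools/list"}


def call_tool_request(request_id: int, name: str, arguments: dict) -> dict:
    """JSON-RPC request a client sends to invoke one tool."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }


# Hypothetical server reply; the tool and its schema are invented for this sketch.
EXAMPLE_TOOLS_RESPONSE = {
    "jsonrpc": "2.0",
    "id": 1,
    "result": {
        "tools": [
            {
                "name": "describe_cluster",
                "description": "Return the status of a cluster.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"cluster_name": {"type": "string"}},
                    "required": ["cluster_name"],
                },
            }
        ]
    },
}


def tools_to_context(response: dict) -> str:
    """Render tool descriptions as text that can be placed in the model's context."""
    lines = []
    for tool in response["result"]["tools"]:
        params = ", ".join(tool["inputSchema"].get("properties", {}))
        lines.append(f"- {tool['name']}({params}): {tool['description']}")
    return "\n".join(lines)
```

The text produced by `tools_to_context` is what reaches the model: knowledge about a tool arrives through the context at run time instead of through training.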

How does AWS imagine MCP integration?

It’s debated whether it’s a good idea to entrust any part of our infrastructure to AI. To answer this question, we reviewed all 60 MCP servers; colleagues experimented with the AWS EKS MCP Server and others through Proof of Concept projects, and we also examined the Prompt Library. But why exactly do we need 60 different MCP servers?

As you may guess from the introduction, MCP communicates through context, which is often extremely limited, and the larger the context, the more expensive it becomes. Enabling many MCP servers at once therefore pushes a lot of tool descriptions and usage information into the context, which increases both hallucination risk and cost. It’s wise to enable only the most essential ones.
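A rough back-of-the-envelope sketch shows why the number of enabled servers matters. The four-characters-per-token ratio is a common rule of thumb, not a property of any particular model, and the function names and the greedy selection are assumptions made purely for illustration.

```python
CHARS_PER_TOKEN = 4  # assumption: coarse rule of thumb, varies by tokenizer


def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text by its length, rounded up."""
    return (len(text) + CHARS_PER_TOKEN - 1) // CHARS_PER_TOKEN


def server_overhead(tools: list[dict[str, str]]) -> int:
    """Estimate the context tokens one server adds through its tool descriptions."""
    return sum(estimate_tokens(t["name"] + t["description"]) for t in tools)


def select_servers(servers: dict[str, list[dict[str, str]]], budget: int) -> list[str]:
    """Keep servers in priority (insertion) order while they fit the token budget."""
    chosen: list[str] = []
    used = 0
    for name, tools in servers.items():
        cost = server_overhead(tools)
        if used + cost <= budget:
            chosen.append(name)
            used += cost
    return chosen
```

Passing a dictionary that maps server names to their tool lists, ordered by importance, returns the subset that fits the budget. A real setup would measure the overhead with the model's own tokenizer instead of a length heuristic.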

AWS Labs organizes its MCP servers into eight categories, from documentation to infrastructure and cost optimization. The descriptions are clear and well structured, and thanks to schema-based formats they’re easy to navigate. A general observation, however, is that servers capable of writing (that is, of modifying infrastructure) come with prominent warnings. The authors strongly discourage using them in production and recommend sandbox or PoC environments instead.

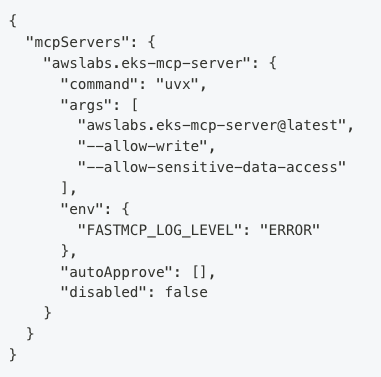

We used Amazon Q Developer or Kiro CLI as the Agent. Communication works over either STDIO or Streamable HTTP (SHTTP), depending on the server. Most servers allow configuration through environment variables and arguments; you can set allow/deny lists to control read/write operations or access to sensitive data.

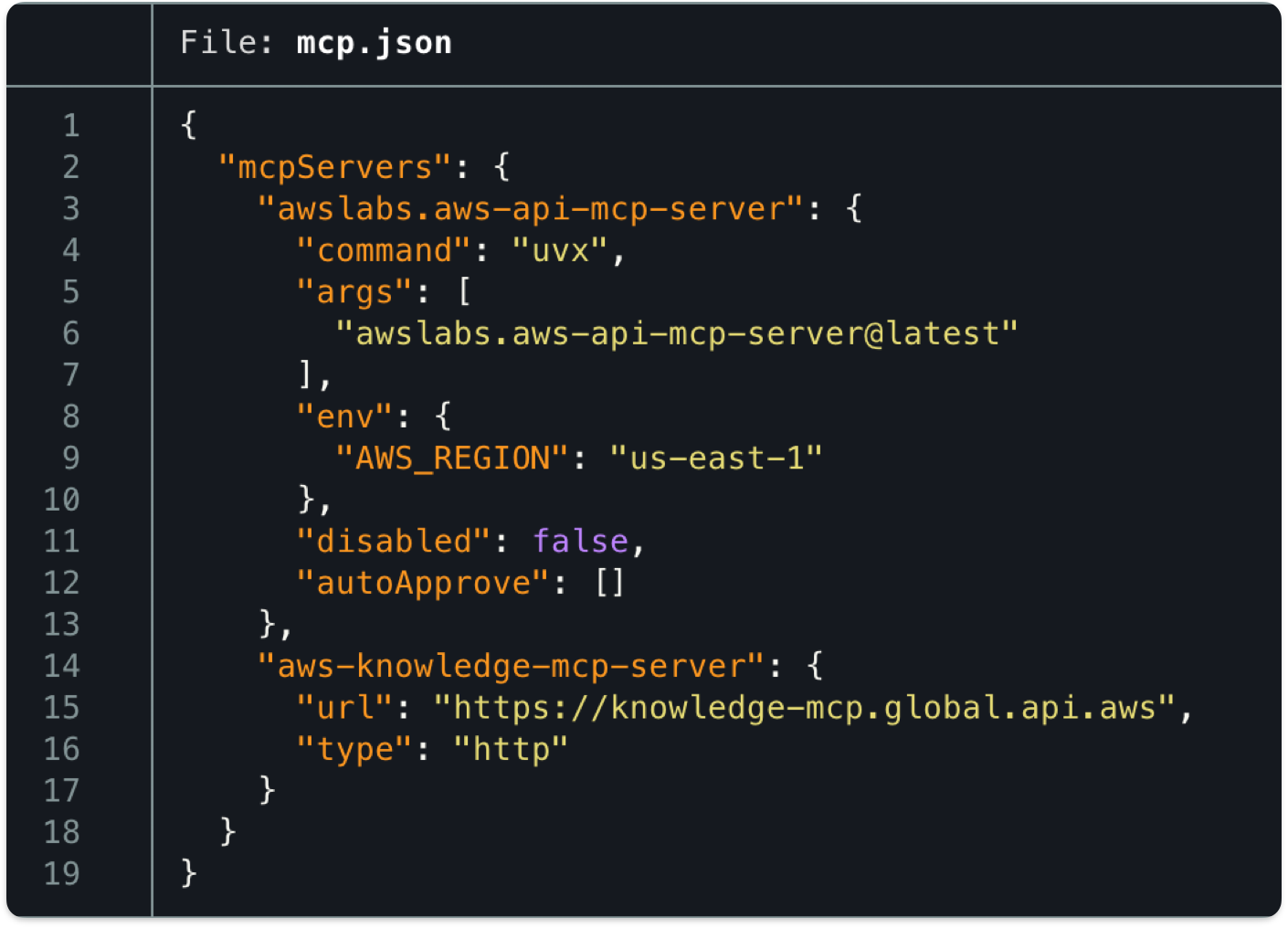

You can configure them under ~/.aws/amazonq/mcp.json. Each server has different parameters. To understand a server’s capabilities, it’s useful to check not only the features but also the tools list for a more complete picture.
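As a hedged illustration, the following Python sketch builds one server entry and writes it as JSON. The `mcpServers` / `command` / `args` / `env` layout and the `uvx` launcher follow the pattern commonly shown in the AWS Labs READMEs, but treat them as assumptions and check each server's own documentation. The region and the profile name `sandbox-readonly` are placeholders.

```python
import json
from pathlib import Path


def server_entry(package: str, env: dict[str, str]) -> dict:
    """One MCP server definition, launched over STDIO via uvx (assumed launcher)."""
    return {"command": "uvx", "args": [f"{package}@latest"], "env": env}


def build_config() -> dict:
    """Assemble a minimal configuration with a single server."""
    return {
        "mcpServers": {
            "awslabs.aws-api-mcp-server": server_entry(
                "awslabs.aws-api-mcp-server",
                {
                    "AWS_REGION": "eu-central-1",  # placeholder region
                    "AWS_API_MCP_PROFILE_NAME": "sandbox-readonly",  # placeholder profile
                },
            )
        }
    }


def write_config(path: Path, config: dict) -> None:
    """Write the configuration as pretty-printed JSON, creating parent folders."""
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2))
```

To apply it, you would call `write_config(Path.home() / ".aws" / "amazonq" / "mcp.json", build_config())`. Note that this overwrites an existing file, so merge by hand if you already have servers configured.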

Concrete AWS solutions

The two fundamental servers are the AWS API MCP Server and the AWS Knowledge MCP Server. These help the agent use the AWS CLI and access official documentation. There’s significant overlap among the MCP servers, but their implementations differ. One of the most recent servers is the AWS Cloud Control API MCP Server, which is also among the most interesting in both implementation and capabilities.

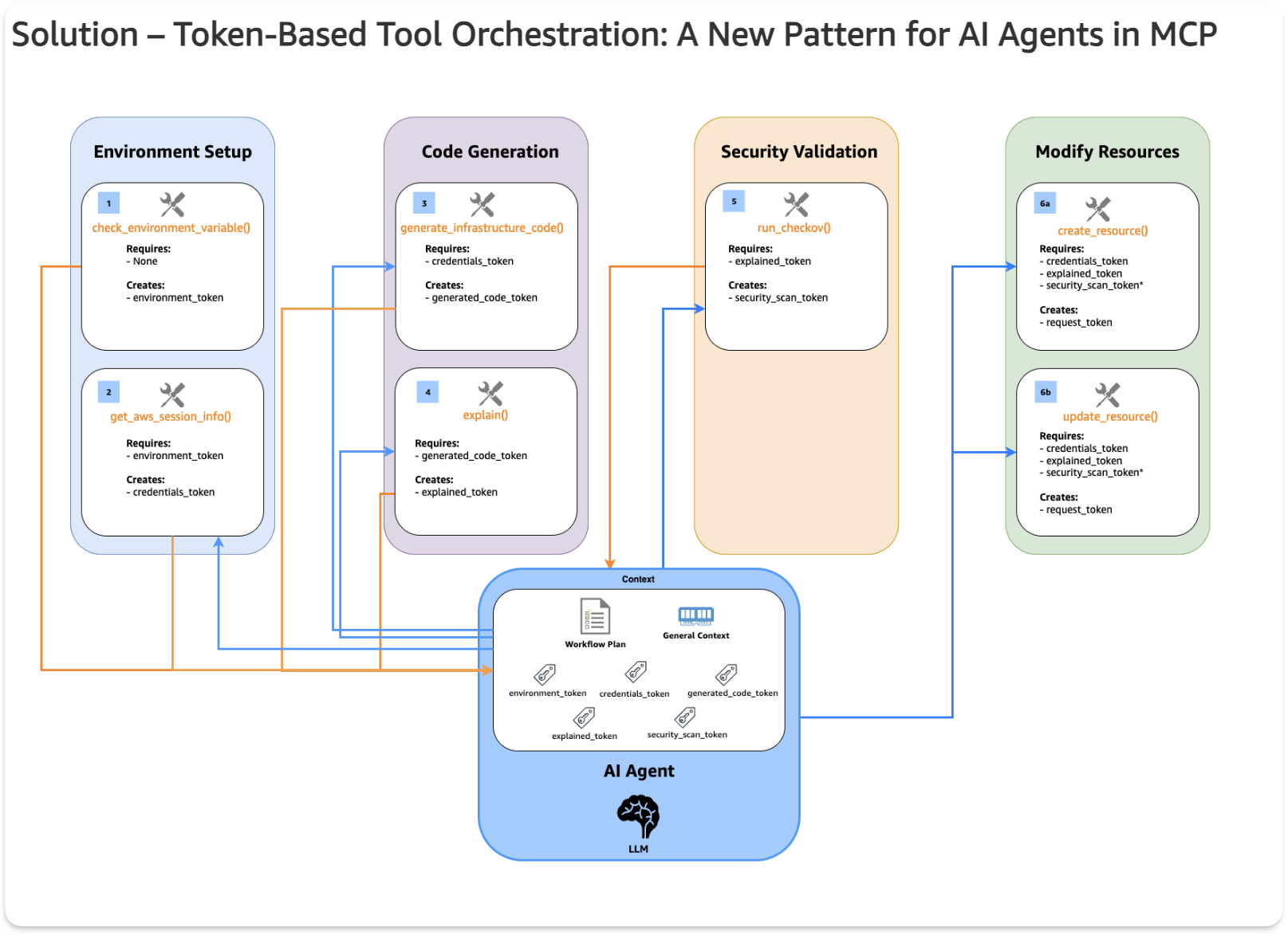

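The solution essentially gates tool usage behind tokens: before it may call a tool, the agent must obtain a token by satisfying prerequisites such as data gathering or preparation. These are workflow tokens handed out by earlier tool calls, not LLM tokens. The enforced order of steps enables the agent to handle complex tasks more effectively. This is a general-purpose server performing CRUDL (create, read, update, delete, list) operations on any AWS resource.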
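The idea of tying tool calls to previously acquired tokens can be sketched as follows. This is not the server's actual implementation: the class, the tool names (`gather_info`, `prepare_change`, `create_resource`), and the token format are all hypothetical and only illustrate the gating pattern.

```python
import secrets


class TokenGatedTools:
    """Each tool hands out a token that the next tool in the workflow requires."""

    def __init__(self) -> None:
        self._issued: dict[str, str] = {}  # token -> name of the stage that issued it

    def _issue(self, stage: str) -> str:
        token = secrets.token_hex(8)
        self._issued[token] = stage
        return token

    def _require(self, token: str, stage: str) -> None:
        # Reject tokens that are unknown or were issued by a different stage.
        if self._issued.get(token) != stage:
            raise PermissionError(f"call '{stage}' first to obtain a valid token")

    def gather_info(self) -> str:
        """Prerequisite step: collect environment data, then return a token."""
        return self._issue("gather_info")

    def prepare_change(self, info_token: str, properties: dict) -> str:
        """Needs the token from gather_info; returns a token for the write step."""
        self._require(info_token, "gather_info")
        return self._issue("prepare_change")

    def create_resource(self, prepared_token: str) -> str:
        """The write operation only runs with a token from prepare_change."""
        self._require(prepared_token, "prepare_change")
        return "created"
```

An agent that tries to jump straight to `create_resource` with a made-up token gets a `PermissionError`, so the only way to reach the write step is to pass through the earlier steps in order.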

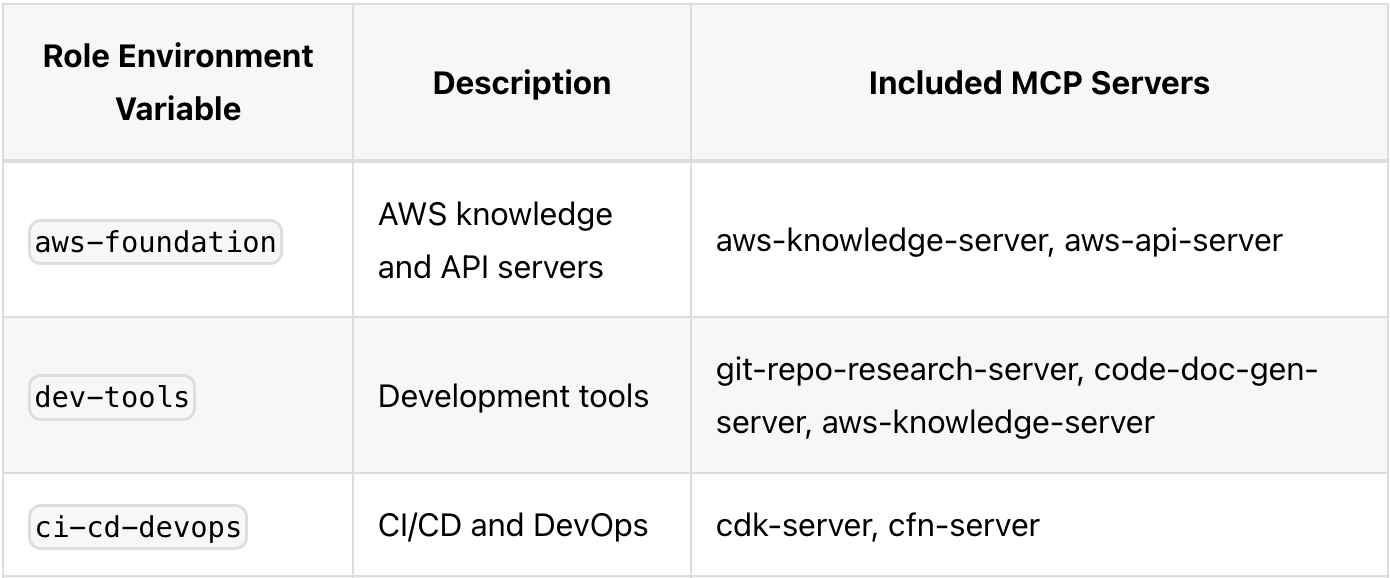

Another unique solution is the Core MCP Server, which works as a proxy. To use it, you define roles, which are likewise passed via environment variables. The documentation shows which role enables which additional MCP servers. This is useful if you don’t want to constantly modify your local mcp.json.

The last category is the bridge MCP servers, which function as API mappers. Their permissions and handling require care. Examples include the AWS Lambda MCP Server, the AWS Step Functions Tool MCP Server, and the Amazon SNS / SQS MCP Server. Their advantage is that all resource access remains within AWS’s internal network without touching the public internet. From a security perspective, this approach enforces separation of duties: the model can invoke Lambda functions but cannot directly access other AWS services. The client needs only AWS credentials to invoke Lambda; the Lambda functions can then access other AWS services using their assigned execution roles.

Summary

AWS released many servers at once; their goals and functionality differ significantly, though overlaps are frequent. This may be because MCP is a new technology that still has many open issues and changes week by week, sometimes fundamentally.

The most useful servers:

- AWS API MCP Server (for simple CLI usage by the agent)

- AWS Knowledge MCP Server (for research and information gathering)

- AWS Cloud Control API MCP Server (for more complex operations under tight constraints)

For specific tasks, it’s worth trying resource-dedicated servers with caution. The documentation recommends configuring AWS_API_MCP_PROFILE_NAME for easier permission management and activity tracking.

Sources:

- AWS MCP Servers

- Controlled Tool Orchestration

- Model Context Protocol

- Hangvezérelt AI - az MCP és az AWS környezet (Voice-Controlled AI: MCP and the AWS Environment)

- AI Agents

- Mixture of Experts

- Granite 4.0 - IBM Granite
